As the scale and complexity of AI infrastructure grows, data center operators need continuous visibility into factors including performance, temperature and power usage. These insights enable data center operators to actively monitor and adjust data center configurations across large-scale, distributed systems — validating that these systems are operating at their highest efficiency and reliability.

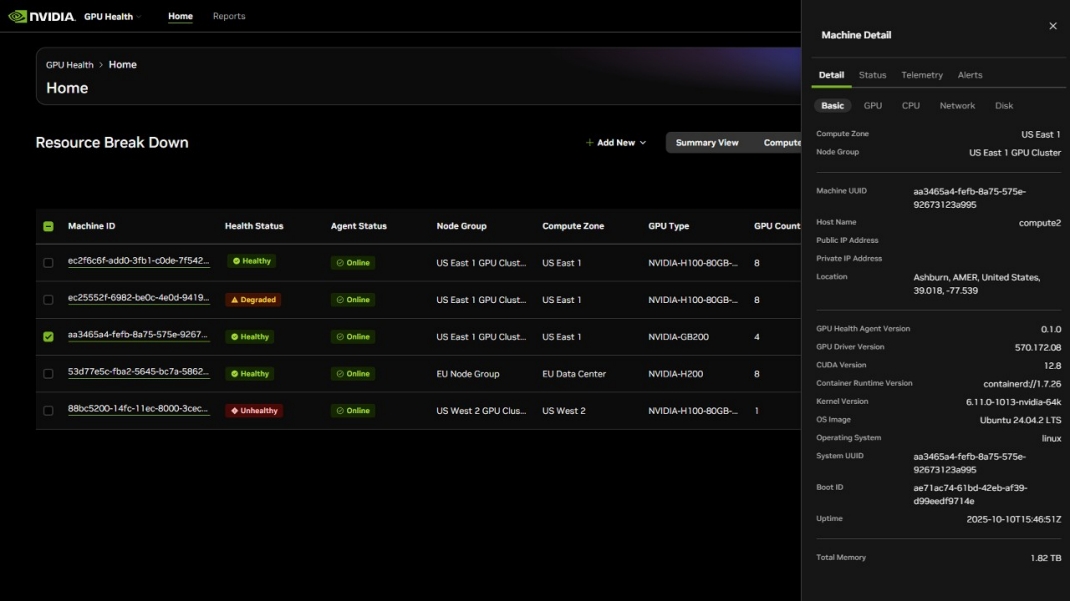

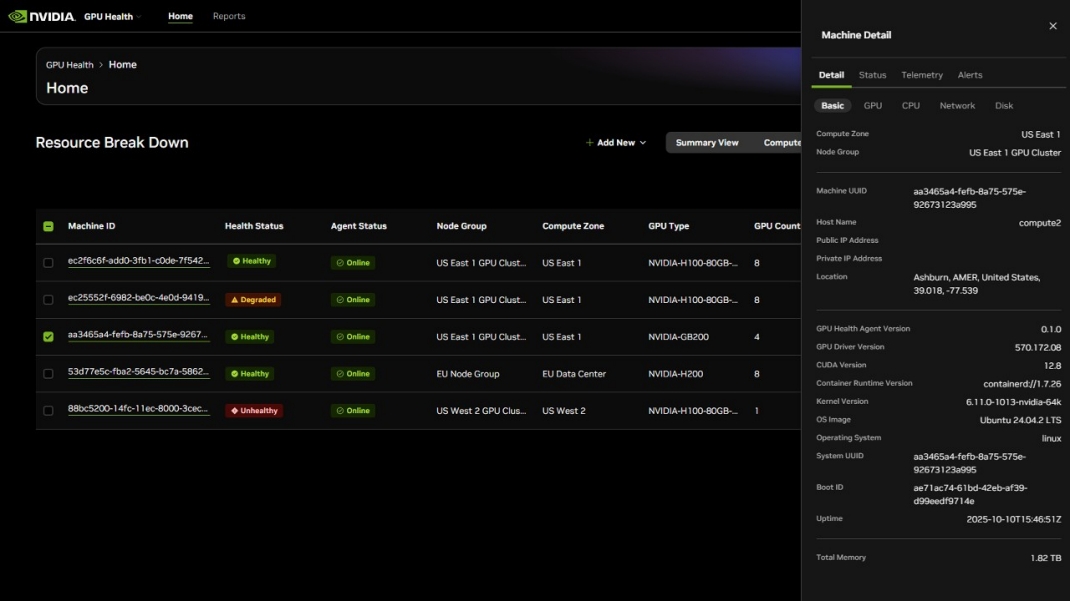

NVIDIA is developing a software solution for visualizing and monitoring fleets of NVIDIA GPUs — giving cloud partners and enterprises an insights dashboard that can help them boost GPU uptime across computing infrastructures.

The offering is an opt-in, customer-installed service that monitors GPU usage, configuration and errors. It will include an open-source client software agent — part of NVIDIA’s ongoing support of open, transparent software that helps customers get the most from their GPU-powered systems.

With the service, data center operators will be able to:

- Track spikes in power usage to keep within energy budgets while maximizing performance per watt.

- Monitor utilization, memory bandwidth and interconnect health across the fleet.

- Detect hotspots and airflow issues early to avoid thermal throttling and premature component aging.

- Confirm consistent software configurations and settings to ensure reproducible results and reliable operation.

- Spot errors and anomalies to identify failing parts early.

These capabilities can help enterprises and cloud providers visualize their GPU fleet, address system bottlenecks and optimize productivity for higher return on investment.

This optional service provides real-time monitoring by each GPU system communicating and sharing GPU metrics with the external cloud service. NVIDIA GPUs do not have hardware tracking technology, kill switches and backdoors.

Open-Source Agent Offers Insights for Data Center Owners

The service will feature a client software agent that the customer can install to stream node-level GPU telemetry data to a portal hosted on NVIDIA NGC. Customers will be able to visualize their GPU fleet utilization in a dashboard, globally or by compute zones — groups of nodes enrolled in the same physical or cloud locations.

The client tooling agent is also slated to be open sourced, providing transparency and auditability. It’ll offer a working example for how customers can incorporate NVIDIA tools into their own solutions for monitoring GPU infrastructure — whether for critical compute clusters or entire fleets.

The software provides insight into a company’s GPU inventory but cannot modify GPU configurations or underlying operations. It provides read-only telemetry data that’s customer managed and customizable.

The service will also enable customers to generate reports that detail GPU fleet information.

As AI applications grow in number and complexity, modern AI infrastructure management is evolving to keep pace. Making sure that AI data centers are running at peak health is vital as AI revolutionizes every industry and application. This software service is here to help.

Register for NVIDIA GTC, taking place March 16-19 in San Jose, California, to learn more.

See notice regarding software product information.